Last year I didn't shy away from criticizing AI coding tools. I was downright obnoxious to the people who did believe in their role in the future of software development, likely in the way that previous generations criticized me for going to Wikipedia or Google instead of an encyclopedia. But the world moves on, and after a couple of checks on my own biases, I gave Claude Code a shot sometime in the early summer last year.

It was... pretty good!

Nothing that matched the hype I was hearing from people, but for the first time I was truly on board with AI as a tool to incorporate into my normal workflows. I didn't trust it to write or think about tests, and I didn't trust it to determine if work was done, but I did trust it to behave like an intern: given a very small and well-defined scope of work, it could produce technically correct logic. I would then be charged with cleaning up after it, which still saved me some time.

By the middle of the summer, I was fully on board with this new workflow. All of a sudden, the boilerplate was gone. I could turn to this tool to just handle things for me, and it would. I would set up the codebase so that the change I was about to ask for would be easy, and then it would crank it out. Suddenly I was accepting more than 90% of the changes produced by the tool, and maybe editing afterwards. Was my job really at risk?

Around the time I got married, Anthropic released extended Claude Code functionality, including Claude Code on the web. Given a repository, Claude would write code directly to a branch for you. This was incredible. On my phone on the beach on my honeymoon, I was prompting Claude to write a Rust clone of the excellent markdownlint-cli2 project.

> Dario Amodei was so right about AI taking over coding.
>
> This is from a Principal Engineer at Google.
>
> Claude Code matched 1 year of team output in 1 hour.
>
> AI has already replaced a lot of the grind that used to be the regular part Software engineers: repeated debugging cycles,… https://t.co/uSt3doHEJo pic.twitter.com/J82anqEw7H
>
> Rohan Paul (@rohanpaul_ai), January 3, 2026

Over the course of a few unfocused days, Claude produced the initial versions of my markdownlint-rs project. It was incredible! Claude had tackled everything in nearly one shot (I did have to keep reminding it to continue working), all while I sat on a beach drinking piña coladas and wine. How much better could it get? I came back energized and eager to explore what I could really do now that I'd bought in.

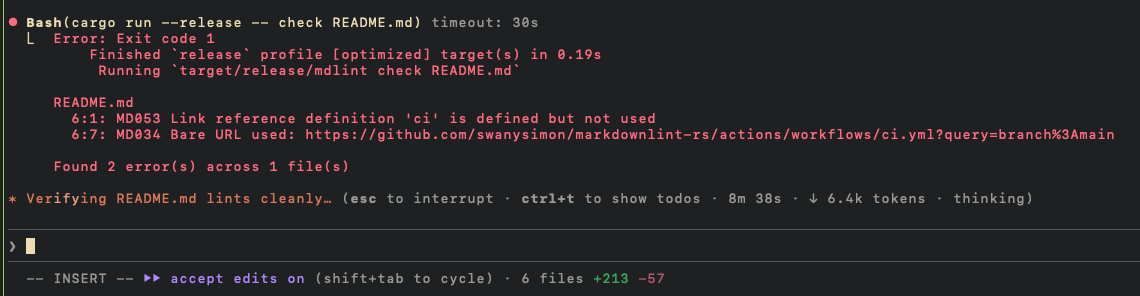

The fall back to reality was swift. Almost immediately, I found that my markdown project was fundamentally flawed. Claude had marked things done that weren't done, contradicted itself in several places, and generally failed to produce anything remotely workable. I've been fighting the backlog of issues related to this ever since. My thesis is still that making a compact, opinionated markdown linter is going to be more and more important as LLMs grow, since that is our primary way of interacting with them, and so I continue to work on the project with whatever free time I have. But Claude really set me up for failure here, and the project was large enough by the time I, the human, got involved that it took me a significant amount of time to get caught up.

Not long after, I started hearing about early adopters of AI coding tools rejecting them. The specific tweet I wanted to link has since been deleted, but one person, who had previously bragged about their team producing code almost entirely via Claude Code and Cursor, said their engineering team had stopped using them. The cost to understand, review, and then correct the heaps of code being dumped into code review was becoming too much, and they found they were shipping higher quality code faster when they didn't use AI coding tools.

In less than a month, I came to agree with this sentiment.

My conclusion has been that AI tools are force multipliers. But just think about the skill of the average person. Think about the quality of average, publicly-available code. The average force is close to zero or even negative. The tool may give you confidence to ship something that you shouldn't, or convince you that you're producing value when you're actually costing those around you. The "slopacalypse" is coming, and coming fast, not just to code but to all things that can be output by AI tools. I'll leave you with the thoughts of AI researcher Andrej Karpathy, who I think presents a balanced take on this topic:

> A few random notes from claude coding quite a bit last few weeks.
>
> Coding workflow. Given the latest lift in LLM coding capability, like many others I rapidly went from about 80% manual+autocomplete coding and 20% agents in November to 80% agent coding and 20% edits+touchups in…
>
> Andrej Karpathy (@karpathy), January 26, 2026