When you have to store and retrieve data from a database, do you choose to execute SQL statements yourself or do you use an object-relational mapper (ORM) to abstract away those calls completely? My answer to that question has been "SQL, obviously" my entire career; ORMs have historically had horrific performance and obscured the actual queries being executed, making it difficult to figure out exactly where things went wrong. They'll often build in features that you don't actually want, like keeping their own cached history of the object in memory, which can prevent it from storing new data when the cache isn't kept in sync. The nearly universal advice in the industry has been: use actual SQL, or a type checked SQL like jOOQ to execute your database interactions.

So what do you do when your team starts using ORM features?

I've largely transitioned to a management role recently, which means I'm not very involved in the codebases at my company anymore. But in the last few weeks I've had the chance to poke around and make some changes, and what I've seen has been shocking: very experienced developers have started using ORM features from SQLAlchemy, a very popular Python ORM and a SQL toolkit library.

I approached with strong skepticism, but after some time working on the queries myself, I've come around to some of the features provided.

Setup

For the rest of the post, I'll be working with the following tables. It's a silly example, but we'll be looking at employees and their addresses. Each employee can have one address per type, which might be "office" or "home". In PostgreSQL, the schema declaration would look something like:

CREATE TABLE employee (

id BIGSERIAL PRIMARY KEY,

name TEXT NOT NULL,

created TIMESTAMP NOT NULL

);

CREATE TABLE employee_address (

employee BIGINT NOT NULL REFERENCES employee (id) ON DELETE CASCADE,

address TEXT NOT NULL,

type TEXT NOT NULL,

PRIMARY KEY (employee, type)

);In SQLAlchemy, we might wrong the objects as something like (ignoring imports, this is not meant to be a tutorial):

class Employee(Base):

__tablename__ = "employee"

id: Mapped[int] = mapped_column(Integer, Sequence("employee_id_seq"), primary_key=True)

name: Mapped[str] = mapped_column(nullable=False)

created: Mapped[datetime] = mapped_column(default=utcnow(), nullable=False)

class EmployeeAddress(Base):

__tablename__ = "employee_address"

employee: Mapped[int] = mapped(Integer, ForeignKey(Employee.id, ondelete="CASCADE"), nullable=False, primary_key=True)

address: Mapped[str] = mapped_column(nullable=False)

type: Mapped[str] = mapped_column(nullable=False, primary_key=True)Loading all addresses

In this particular scenario, I wanted to load all addresses for each employee, and actually wanted to have the address load every time I grabbed an employee. The SQL would either have to be split into 2 queries (one to load the employees, then the other to load all their addresses) and then combined in memory in Python, or I could follow my strategy from a previous post and use some semi-fancy JOIN operations to get everything in one query.

If you're not familiar with SQL, the latter can be confusing, though I would argue it's actually not too complex if you take even a little bit of time. And as I've covered before, the former can be disastrous to your performance. It turns out ORMs have a way to work with these relationships directly and abstract this away from you!

class Employee(Base):

# everything as before

addresses: Mapped[list["EmployeeAddress"]] = relationship()But... it doesn't actually load by default. In fact, if you try to access the addresses field on the employee, you'll get an error! This is, in my opinion, a gigantic footgun. It's incredible unintuitive; if I select(Employee).where(Employee.id == <number>, I expect all the fields on the employee to load. Instead, I have to remember to add ORM loader options to my select statement. In this case, my statement looks like select(Employee).where(Employee.id == <number>).options(joinedload(Employee.addresses). That extra clause makes all the difference. If my codebase didn't have good test coverage, I never would have found this issue.

One thing I will give SQLAlchemy credit for here: they make it very easy to intuit exactly what query is going to be run. The joinedload will run LEFT OUTER JOIN, and if that's not what I want, there are other ways to coerce the data load into other strategies.

Storing nested fields

Now, how do you store this data? Instead of storing the data for the employee, then updating all the addresses, SQLAlchemy makes it easy!

new_employee = session.merge(employee)It couldn't be simpler. Staring at this, I found myself doubting all those years of writing INSERT INTO employee VALUES ... ON CONFLICT UPDATE SET ... statements. Having something that will automatically handle new fields (one thing I've definitely missed in the past) is an incredible feature.

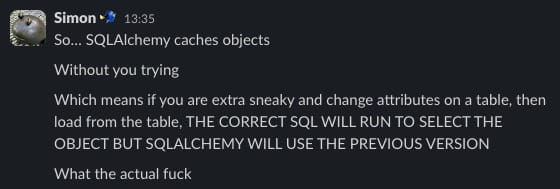

But... my tests weren't showing the correct object coming back from the merge. After turning on every bit of debugging I could find, I saw SQLAlchemy triggering the updates and inserts, and even the final select statement. In fact, I saw the rows coming back from the database, yet the in-memory object in Python was still showing the wrong information. What gives?!?

The fix was trivial in the end:

class Employee(Base):

# everything as before

addresses: Mapped[list["EmployeeAddress"]] = relationship(cascade="all, delete-orphan")The problem is that now I can't trust anything that SQLAlchemy does in the ORM layer without significant testing.

Conclusion

My suggestion to the world is still the same as my previous advice: use plain SQL as much as possible. But I will give SQLAlchemy credit: the ORM layer is significantly better than it was in the past, and they worked hard to make the behavior predictable and tunable.

Most engineers don't want to think about their query patterns. You'll notice I didn't declare any indexes on my tables; that's actually pretty standard for the average database I've run into. For these people, SQLAlchemy's ORM actually provides a high quality way to abstract away the underlying database. The merge is better than trying to get them to write their own upsert logic (despite my issues with it), and being able to automagically load related fields with a simple field declaration is an incredible feature.

I'm now a tentative user of the ORM features in SQLAlchemy. I still run my select statements as selects instead of queries, I still filter with where clauses instead of filters. But I've appreciated how much boilerplate it's been able to remove from some of my queries, and now I'm nearly a convert. Nearly